In August 2021 Apple announced that they intended to use their power to
control the software on the customer-owned devices they manufacture to
deploy software changes that would make users' devices test their users'
files against a database and then report matches to governments without the
users' knowledge or consent, with the stated purpose of scanning for images
of child abuse. These databases were to be sourced from largely
unaccountable quasi-government organizations.

Apple promoted the system as having powerful technical and procedural
privacy protections, but these protections weren't protections for the
users; they were protections from the users:

Their proposed system would have used powerful cryptography, yes, but its
purpose was to conceal what images were being matched, even though the
scanning was being performed on the user's own computer, and in doing so
shield Apple and their data sources from accountability over the kinds of
content they are matching. Apple promoted their review process as
protective, but it was structured to bypass American users' Fourth Amendment
protection against government search and seizure by having an Apple employee
technically perform the search, protecting governments from users' civil
rights.

An automated search of your person (cellphone) and effects (desktops)
without any suspicion, based on a secret list maintained by governmental and
unaccountable quasi-governmental entities.

What could possibly go wrong? I don't use Apple products, but I share a
world with people who do. I got involved in the public discussion arguing
against the technology in principle. I found that some people argued that
while some of the risks I suggested were theoretically possible, they
weren't practically possible.

For example: people could have their privacy invaded due to lawful images
that were rigged to match against images in the database, or due to images
in the database surreptitiously rigged to match against lawful images
connected to targeted races, religions, and political views. Many people
were basically willing to regard the technology as magic and on that basis
assume it did what Apple said and only what they said. But almost every
story about 'magic' teaches the opposite lesson, one that's apt for new and
poorly understood technology.

By this point people had already extracted the scanning software from Apple
computers, and had used it to construct matches between real images and
obviously fake noise images. When these came up in discussion they were
dismissed on the basis that people wouldn't be easily tricked into
downloading noise images or that they wouldn't be entered into the secret
databases. I disagree, but it also misses the point: the whole idea of the
system was wrongheaded and vulnerable, and attacks only get better. So I
analyzed their scheme and discovered a way to turn any image into a false
positive match for almost any other image, without even seeing the other
image, only the fingerprint used for matching: a very flexible second
preimage attack, which is the most severe kind of attack on a hash function.

Unfortunately, I found that posting these examples only sent people down a
path of trying to suggest band-aids to fix the specific examples. Without
examples my principled concerns were too theoretical to some; once there
were examples, others became too tunnel-visioned about just those specific
examples. As a result I stopped posting examples, not wanting to contribute
to improvements that only cover up the flawed concept.

In my view, your personal computing device is a trusted agent. You cannot
use the internet without it, and even outside of lockdown (as this was in
2021) most people can't realistically live their lives without use of the
internet. You share with it your most private information, more so even than
you do with your other trusted agents like your doctor or lawyers (whom you
likely communicate with using the device). Its operation is opaque to you:
you're just forced to trust it. As such I believe your device ethically owes
you a duty to act in your best interest, to the greatest extent allowed by
the law, not unlike your lawyer's obligation to act in your interest.

A lot of ink is being spilled these days around "AI ethics" but it seems to
me that the largest technology companies today struggle to behave ethically
with respect to simple computing technology we've had since the 80s. If we
can't even start from a first principle that a computer's first objective is
to serve its user, what can any kind of computing ethics be except an effort
to, ultimately, exert control against other people under the guise of merely
controlling machines?

The existing mass scanning of users' files on commercial services (where it
has fewer ethical complications, since many users know they lose privacy
when they entrust their files to third parties) has, in spite of supposedly
getting millions of hits per year, resulted in a comparatively negligible
number of convictions, much less any evidence of actually protecting
children from harm.

All too often we deploy harmful non-solutions for the sake of urgency.

"Something must be done, this is something, therefore we must do it."

—politician's fallacy.

Fortunately, many technology luminaries adopted nuanced positions on the
risks and limitations of Apple's scheme and published position papers
arguing against it. Apple was convinced to abandon the program.

But, unfortunately, not before giving ideas to governments that don't
consider civil liberties particularly fundamental and inspiring a number of
bad legislative proposals which will periodically repeat for decades to
come.

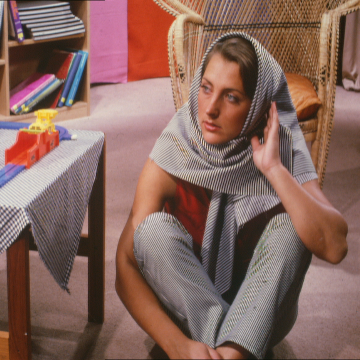

My technical efforts had the amusing result of giving my partner the ability
to say that she appears (literally) in a paper by a variety of respected
figures (page 29 of the above paper).

How my second-preimage attack was constructed:

Apple used a hash, a kind of digital fingerprint, to match images. But to
keep the hash consistent even if images were slightly altered, they built it
from a neural network trained to do just that. But "neural network" and
"hash" are words that obviously don't belong together if you understand
anything about hashes and neural networks.

Neural networks are designed to be differentiable: they're a mathematical
function structured so you can efficiently compute how changing the inputs
will change the outputs, and one where changes usually have a more or less
smooth effect. This is necessary for trainability.

Cryptographic hashes, on the other hand, are designed to be maximally
non-linear and maximally non-differentiable: any change should have a
complex and difficult to predict effect on the output.
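As a rough illustration of that contrast, the sketch below changes one pixel of a toy 64-pixel image by the smallest possible amount and counts how many output bits change under SHA-256 versus under a made-up "perceptual" hash. The perceptual hash here (`toy_perceptual_bits`, a random linear projection followed by a sign threshold) is purely an assumption for illustration and is not Apple's network; the image size, bit count, and seed are likewise arbitrary.

```python
import hashlib
import random


def hamming(a, b):
    """Number of positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def sha256_bits(data: bytes):
    """SHA-256 digest unpacked into a list of 256 bits."""
    digest = hashlib.sha256(data).digest()
    return [(byte >> k) & 1 for byte in digest for k in range(8)]


rng = random.Random(0)
N_PIXELS, N_BITS = 64, 16

# Hypothetical smooth hash: fixed random weights, one row per output bit.
W = [[rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)] for _ in range(N_BITS)]


def toy_perceptual_bits(pixels):
    """Sign of each random projection of the (centered) pixel values."""
    return [
        1 if sum(w * (p - 128) for w, p in zip(row, pixels)) > 0 else 0
        for row in W
    ]


image = [rng.randrange(256) for _ in range(N_PIXELS)]
tweaked = list(image)
tweaked[0] ^= 1  # flip the lowest bit of a single pixel

sha_flips = hamming(sha256_bits(bytes(image)), sha256_bits(bytes(tweaked)))
toy_flips = hamming(toy_perceptual_bits(image), toy_perceptual_bits(tweaked))

print(sha_flips, "of 256 SHA-256 bits changed")
print(toy_flips, "of", N_BITS, "toy perceptual bits changed")
```

The cryptographic hash should scramble roughly half of its output bits, while the smooth hash barely moves. That smoothness is exactly the property that makes the output steerable.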

So the obvious thing to do is run the hash, compute a direction to change
the image that will bring the output closer to the desired output, and keep
repeating. That will produce a match, but the result won't look like a
sensible image anymore. The solution is just to introduce a second objective
that continually biases the process back towards something that looks
reasonable, shaping the noise through negative feedback, a standard digital
signal processing technique. It was literally the first thing I tried, and I
spent more time figuring out how to use the machine learning tools, since
I'd never used them before, than I did working on the actual problem (though
my later images looked somewhat better than my earlier ones as I twiddled
parameters).
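The loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the code used for the real attack: the "hash" is a hypothetical single random linear layer with a sign threshold (`toy_hash`), the gradient is written out by hand instead of coming from a machine learning framework, and the second objective is a plain quadratic pull back toward the starting image rather than the noise-shaping feedback described in the text. The names `forge`, `pull`, and `margin` and all the constants are invented for the example.

```python
import random

rng = random.Random(2021)
N_PIXELS, N_BITS = 64, 16

# Hypothetical differentiable "hash": one random linear layer, then a sign.
W = [[rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)] for _ in range(N_BITS)]


def logits(img):
    """Pre-threshold outputs; smooth (here linear) in the pixel values."""
    return [sum(w * (p - 0.5) for w, p in zip(row, img)) for row in W]


def toy_hash(img):
    return [1 if z > 0 else 0 for z in logits(img)]


def forge(source, target_bits, steps=1000, lr=0.002, pull=0.05, margin=1.0):
    """Nudge `source` until its hash equals `target_bits`, staying close to it."""
    img = list(source)
    for _ in range(steps):
        # Objective 2: bias the image back toward the original.
        grad = [pull * (p - s) for p, s in zip(img, source)]
        # Objective 1: hinge loss pushing each logit to the target's side.
        for row, z, bit in zip(W, logits(img), target_bits):
            sign = 1.0 if bit else -1.0
            if sign * z < margin:
                for i, w in enumerate(row):
                    grad[i] -= sign * w
        # Gradient step, clamped to the valid pixel range [0, 1].
        img = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(img, grad)]
    return img


source = [rng.uniform(0.2, 0.8) for _ in range(N_PIXELS)]
target_image = [rng.uniform(0.2, 0.8) for _ in range(N_PIXELS)]
target_bits = toy_hash(target_image)  # the attacker only needs these bits

forged = forge(source, target_bits)
drift = max(abs(a - b) for a, b in zip(forged, source))
print("hash matches target:", toy_hash(forged) == target_bits)
print("largest per-pixel change:", round(drift, 3))
```

Note that `forge` never looks at `target_image`, only at its fingerprint. In this toy, raising `pull` keeps the result closer to the original at the cost of making the match harder to reach, which is the same trade-off that parameter twiddling explores.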

As I write this in 2023, I think this sort of solution would be found even
faster now that diffusion generative image models are so popular, as they
work through a similar (but much more powerful) iterative denoising process
and could probably be used to produce attack images that look even more
natural than the ones I produced.

Additional posts by me on the subject: